A few nights before Christmas, when, all through the house, not a distraction was stirring, not even a spouse, I posted about a technique that mixed up Resources and Data Binding in WPF, letting you use data binding to specify the key of the resource you wanted to use for a property. This trick helps to keep ViewModels unpolluted by Viewish things such as the URIs of images, while still retaining some control of those aspects - for example, over which Images are shown.

There was one thing I didn’t like about the technique that I showed: for every kind of Dependency Property that was to be the target of a data-bound resource key, you had to use my ResourceKeyBindingPropertyFactory to derive a new Dependency Property to hold the data binding.

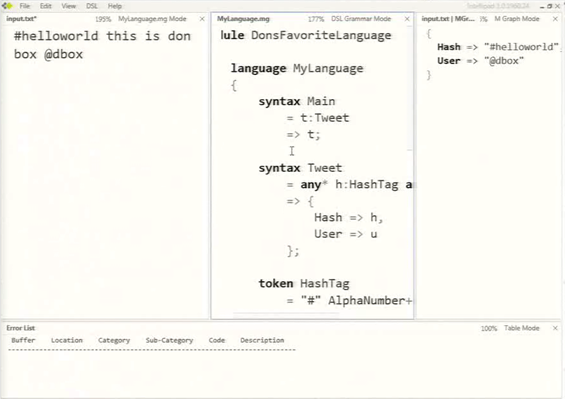

Today I’m going to show a much more elegant technique: the ResourceKeyBinding markup extension. In the following snippet, I’ve modified the example I showed last time so that it now uses my new ResourceKeyBinding to data bind the keys of the resources used for the caption on the buttons:

    <DataTemplate>
        <Button Command="{Binding}" Padding="2" Margin="2" Width="100" Height="100">
            <StackPanel>
                <Image HorizontalAlignment="Center"
                       Width="60"
                       app:ResourceKeyBindings.SourceResourceKeyBinding="{Binding Converter={StaticResource ResourceKeyConverter}, ConverterParameter=Image.{0}}"/>
                <TextBlock Text="{ext:ResourceKeyBinding Path=Name, StringFormat=Caption.{0} }" HorizontalAlignment="Center" FontWeight="Bold" Margin="0,2,0,0"/>
            </StackPanel>
        </Button>
    </DataTemplate>

As you can see from the TextBlock element, you use ResourceKeyBinding in almost exactly the same way that you would use a normal Binding. Not shown here are the Source, RelativeSource and ElementName properties, which work as you would expect; and, as per Binding, if all of these are omitted, the data source for the ResourceKeyBinding is the DataContext of the element. I’m also making use of the StringFormat capability of data bindings, which gets the value of the property indicated by Path and applies the given string format to it. So a Name of "Angry" becomes the resource key "Caption.Angry".
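As a rough sketch of the explicit-source options mentioned above, the following fragment points a ResourceKeyBinding at another element rather than at the DataContext. The ComboBox, its name `moodPicker`, and the assumption that its selected item exposes a string `Name` property are all hypothetical and are not part of the sample project; only the `ext:ResourceKeyBinding` usage itself mirrors the snippet above.

```xml
<StackPanel>
    <!-- Hypothetical source element: its items are assumed to have a string Name property -->
    <ComboBox x:Name="moodPicker" ItemsSource="{Binding}" DisplayMemberPath="Name"/>

    <!-- The resource key is built from the selected item's Name, e.g. "Caption.Angry" -->
    <TextBlock Text="{ext:ResourceKeyBinding ElementName=moodPicker, Path=SelectedItem.Name, StringFormat=Caption.{0} }"/>
</StackPanel>
```

Only one of ElementName, RelativeSource and Source should be given at a time, just as with an ordinary Binding.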

With this in place, the TextBlock should be given the appropriate value picked out from our amended App.xaml:

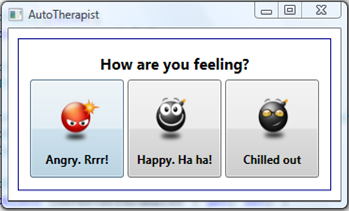

    <Application.Resources>
        <BitmapImage x:Key="Image.AngryCommand" UriSource="Angry.png"/>
        <BitmapImage x:Key="Image.CoolCommand" UriSource="Cool.png"/>
        <BitmapImage x:Key="Image.HappyCommand" UriSource="Happy.png"/>
        <sys:String x:Key="Caption.Angry">Angry. Rrrr!</sys:String>
        <sys:String x:Key="Caption.Happy">Happy. Ha ha!</sys:String>
        <sys:String x:Key="Caption.Cool">Chilled out</sys:String>
    </Application.Resources>

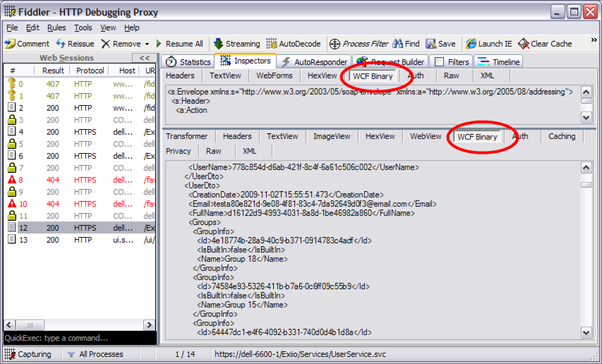

And sure enough, it is:

Behind the curtain

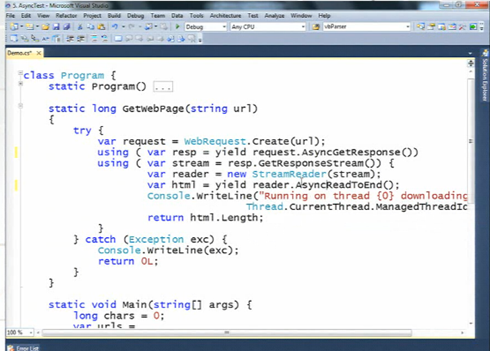

So how does it work? There are two parts to it. The first component is the markup extension itself:

public class ResourceKeyBindingExtension : MarkupExtension

{

public override object ProvideValue(IServiceProvider serviceProvider)

{

var resourceKeyBinding = new Binding()

{

BindsDirectlyToSource = BindsDirectlyToSource,

Mode = BindingMode.OneWay,

Path = Path,

XPath = XPath,

};

//Binding throws an InvalidOperationException if we try setting all three

// of the following properties simultaneously: thus make sure we only set one

if (ElementName != null)

{

resourceKeyBinding.ElementName = ElementName;

}

else if (RelativeSource != null)

{

resourceKeyBinding.RelativeSource = RelativeSource;

}

else if (Source != null)

{

resourceKeyBinding.Source = Source;

}

var targetElementBinding = new Binding();

targetElementBinding.RelativeSource = new RelativeSource()

{

Mode = RelativeSourceMode.Self

};

var multiBinding = new MultiBinding();

multiBinding.Bindings.Add(targetElementBinding);

multiBinding.Bindings.Add(resourceKeyBinding);

// If we set the Converter on resourceKeyBinding then, for some reason,

// MultiBinding wants it to produce a value matching the Target Type of the MultiBinding

// When it doesn't, it throws a wobbly and passes DependencyProperty.UnsetValue through

// to our MultiBinding ValueConverter. To circumvent this, we do the value conversion ourselves.

// See http://social.msdn.microsoft.com/forums/en-US/wpf/thread/af4a19b4-6617-4a25-9a61-ee47f4b67e3b

multiBinding.Converter = new ResourceKeyToResourceConverter()

{

ResourceKeyConverter = Converter,

ConverterParameter = ConverterParameter,

StringFormat = StringFormat,

};

return multiBinding.ProvideValue(serviceProvider);

}

[DefaultValue("")]

public PropertyPath Path { get; set; }

// [snipped rather uninteresting declarations for all the other properties]

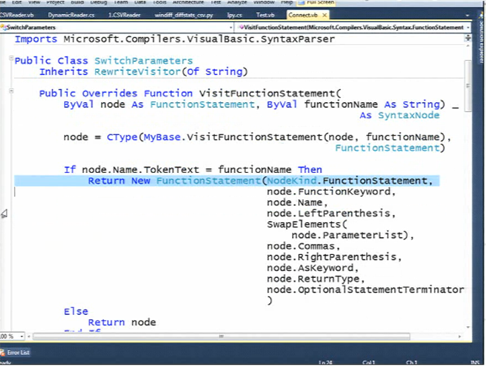

}Under the covers, ResourceKeyBindingExtension is being rather cunning. It constructs a MultiBinding with two child bindings: one binding is used to get hold of the resource key: this is initialised with the parameters that ResourceKeyBinding is given – the property path and data source, for example. The other child binding is set up with a RelativeSource mode of Self so that it grabs a reference to the ultimate target element (in the case of the example above, the TextBlock).
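To make the shape of that MultiBinding concrete, here is roughly what the extension assembles for the TextBlock caption, written out by hand in XAML. This is only an illustrative sketch: the resource key `CaptionResourceConverter` is hypothetical, and it assumes ResourceKeyToResourceConverter is accessible from XAML and declared as a resource, which the sample does not actually do (the extension creates the converter in code).

```xml
<!-- Hypothetical resource declaration, assumed to live in a ResourceDictionary in scope -->
<ext:ResourceKeyToResourceConverter x:Key="CaptionResourceConverter" StringFormat="Caption.{0}"/>

<TextBlock HorizontalAlignment="Center">
    <TextBlock.Text>
        <MultiBinding Converter="{StaticResource CaptionResourceConverter}">
            <!-- First child: the target element itself, used for the resource lookup -->
            <Binding RelativeSource="{RelativeSource Self}"/>
            <!-- Second child: the value that becomes the resource key -->
            <Binding Path="Name" Mode="OneWay"/>
        </MultiBinding>
    </TextBlock.Text>
</TextBlock>
```

The order of the child bindings matters, because the converter reads the element from the first value and the key from the second.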

Every MultiBinding needs a converter, and we configure ours at the point where ProvideValue assigns multiBinding.Converter. The job of this converter is to use the resource key obtained by the second child binding to find the appropriate resource in the pool of resources available to the target element obtained by the first child binding. FrameworkElement.TryFindResource does the heavy lifting for us here:

    class ResourceKeyToResourceConverter : IMultiValueConverter
    {
        // expects the target object as the first parameter, and the resource key as the second
        public object Convert(object[] values, Type targetType, object parameter, System.Globalization.CultureInfo culture)
        {
            if (values.Length < 2)
            {
                return null;
            }

            var element = values[0] as FrameworkElement;
            var resourceKey = values[1];

            if (ResourceKeyConverter != null)
            {
                resourceKey = ResourceKeyConverter.Convert(resourceKey, targetType, ConverterParameter, culture);
            }
            else if (StringFormat != null && resourceKey is string)
            {
                resourceKey = string.Format(StringFormat, resourceKey);
            }

            // TryFindResource needs both a target element and a non-null key,
            // so bail out if either is missing
            if (element == null || resourceKey == null)
            {
                return null;
            }

            var resource = element.TryFindResource(resourceKey);
            return resource;
        }

        // The binding is one-way only, so converting back is not supported
        public object[] ConvertBack(object value, Type[] targetTypes, object parameter, System.Globalization.CultureInfo culture)
        {
            throw new NotImplementedException();
        }

        public IValueConverter ResourceKeyConverter { get; set; }

        public object ConverterParameter { get; set; }

        public string StringFormat { get; set; }
    }

You’ll notice that if ResourceKeyBinding is given a Converter or a StringFormat, it doesn’t give these to resourceKeyBinding as you might expect. Instead it passes them on to the ResourceKeyToResourceConverter, which handles the conversion or string formatting itself. I’ve not done it this way just for fun: I found out the hard way that if you include a converter in any of the child Bindings of a MultiBinding, then WPF, rather unreasonably in my opinion, expects that converter to produce a result of the same Type as the property that the MultiBinding is targeting. If the Converter on the child Binding produces a result of some other type, then the MultiBinding passes DependencyProperty.UnsetValue to its converter rather than that result. There’s a forum thread discussing this behaviour (linked in the code comment in ProvideValue) but no real answer as to whether this is by design or a bug.

Watch this bug don’t getcha

There is one other gotcha with custom markup extensions, and this one is definitely a bug in Visual Studio. If you define a custom markup extension and then, in XAML that is part of the same assembly, set one of the properties of that markup extension using a StaticResource, you’ll get a compile-time error similar to:

Unknown property '***' for type 'MS.Internal.Markup.MarkupExtensionParser+UnknownMarkupExtension' encountered while parsing a Markup Extension.

The workaround, as Clint discovered, is either to put your markup extension in a separate assembly (which is what I’ve done) or use Property Element syntax for the markup extension in XAML.
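For the second workaround, Property Element syntax might look something like the fragment below. It is a sketch rather than code from the sample: it assumes the ResourceKeyConverter resource from the earlier snippet is in scope and accepts a format string such as `Caption.{0}` as its parameter, in the same way it is given `Image.{0}` for the Image.

```xml
<TextBlock HorizontalAlignment="Center" FontWeight="Bold" Margin="0,2,0,0">
    <TextBlock.Text>
        <!-- The markup extension is written as an element, so the StaticResource
             is assigned through an ordinary attribute rather than a nested extension -->
        <ext:ResourceKeyBinding Path="Name"
                                Converter="{StaticResource ResourceKeyConverter}"
                                ConverterParameter="Caption.{0}"/>
    </TextBlock.Text>
</TextBlock>
```

It is wordier than the attribute form, but it keeps the markup extension in the same assembly as the XAML that uses it.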

Try it yourself

I’ve updated the code on the MSDN Code Gallery page, so go and see it for yourself.