I’m having great fun watching the Microsoft PDC 2009 session videos, and blogging the highlights for future reference. In case you want to jump into the video, I’ve included some time-checks in brackets (0:00). Read the rest of the series here.

Note that there is currently a problem with the encoding of the video linked to below which means you can’t seek to an arbitrary offset. Hopefully that will be fixed soon.

In a 45 minute lunch-time talk, Don Box (once he’d finished munching his lunch) and Jeff Pinkston gave an overview of the “M” language (quotes, or air-quotes if you’re talking, are apparently very important to the PDC presenters invoking the name “M”, because it is only a code-name, and the lawyers get angsty if people aren’t reminded of that).

“M” is a language for data (1:26). Since last year, Microsoft have combined the three components MGraph, MGrammar and MSchema into this one language “M” that can be used for data interchange, data parsing and data schema definition. It will be released under the Open Specification Promise (OSP) which effectively means that anybody can implement it freely.

As Don said, “M lives at the intersection of text avenue and data street” (2:56). This means that not only is M a language for working with data, but M itself can be represented in text or in the database.

At PDC last year, Microsoft demonstrated “M” being used as an abstraction layer above T-SQL, and also as a language for defining Grammar specifications. This year, they are introducing “M” as a way of writing Entity Framework data models (4:00).

Getting into the detail, Don started by describing how M can be used to write parsers: functions that turn text into structured data (5:02). You use M to define your language in terms of Token rules and Syntax rules (6:02): Token rules describe patterns that sequences of characters should match, and Syntax rules describe sequences of tokens. Apparently there are a few innovations in the “M” language: it uses a GLR parser and it can have embedded token sets to allow, for example, XML and JSON to be used in the same language (6:40) [sorry I can’t make it clearer than that - Don rushed through this part].
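To make the token/syntax split a bit more concrete, here is a minimal sketch in Python rather than “M” (I'm not reproducing the real “M” grammar syntax here). The toy language, the rule names `Number` and `Plus`, and the functions `tokenize` and `parse_sum` are all my own inventions for illustration, and this is a plain hand-rolled matcher, not a GLR parser.

```python
import re

# Token rules: each one names a pattern that a run of characters must match.
# (Hypothetical rules for a toy "number + number" language.)
TOKEN_RULES = [
    ("Number", r"\d+"),
    ("Plus", r"\+"),
    ("Whitespace", r"\s+"),
]
TOKEN_RE = re.compile(
    "|".join(f"(?P<{name}>{pattern})" for name, pattern in TOKEN_RULES)
)


def tokenize(text):
    """Turn text into a list of (token name, matched text) pairs."""
    tokens = []
    pos = 0
    while pos < len(text):
        match = TOKEN_RE.match(text, pos)
        if match is None:
            raise ValueError(f"unexpected character {text[pos]!r} at offset {pos}")
        if match.lastgroup != "Whitespace":  # whitespace is matched but discarded
            tokens.append((match.lastgroup, match.group()))
        pos = match.end()
    return tokens


# Syntax rule: a sequence of tokens.
SUM_SYNTAX = ["Number", "Plus", "Number"]


def parse_sum(text):
    """Parse text against SUM_SYNTAX and return structured data."""
    tokens = tokenize(text)
    names = [name for name, _ in tokens]
    if names != SUM_SYNTAX:
        raise ValueError(f"expected {SUM_SYNTAX}, got {names}")
    values = [value for _, value in tokens]
    return {"Left": int(values[0]), "Right": int(values[2])}
```

Calling `parse_sum("12 + 30")` turns the text into a small record with `Left` and `Right` fields, which is the "text in, structured data out" idea Don was describing.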

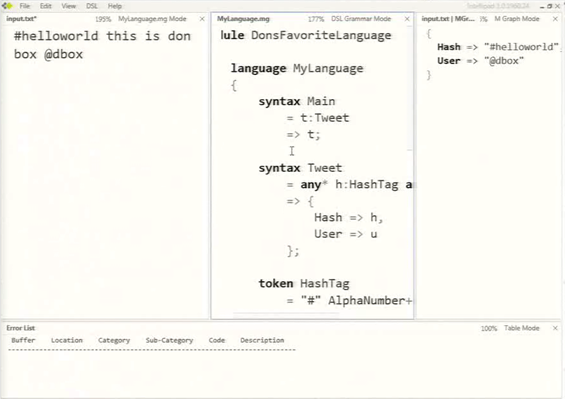

Then came a demo (8:15) in which Don and Jeff showed how a language can be interactively defined in Intellipad, with Intellipad even doing real-time syntax checking on any sample input text you provide it with.

Notice from the screenshot how you can define pattern variables in your syntaxes (and also in tokens) and then use these in expressions to structure the output of your language: in syntax Tweet, for example, the token matching HashTag is assigned to the variable h, and the output of the syntax (the part following the =>) then emits that value under the name “Hash”. Integration with Visual Studio has also been improved to make it easy to load a language, use it to parse some input, and then work with the structured output in your code.
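If pattern variables are new to you, named groups in a regular expression are a rough analogy. The sketch below is Python, not “M”, and it is not the grammar from the demo: the pattern, the `m` variable, the “Message” field and the `parse_tweet` function are assumptions I've made up to show the idea of binding a match to a name and then shaping the output from it.

```python
import re

# Hypothetical "Tweet" rule: some free text followed by a single hashtag.
# The named groups m and h play the role of pattern variables.
TWEET = re.compile(r"(?P<m>[^#]*)#(?P<h>\w+)")


def parse_tweet(text):
    """Return structured output built from the bound variables, or None."""
    match = TWEET.fullmatch(text)
    if match is None:
        return None
    # The equivalent of the part after "=>": build the output from m and h.
    return {"Message": match.group("m").strip(), "Hash": match.group("h")}
```

Here `parse_tweet("Watching PDC #pdc09")` yields a record whose “Hash” field holds `pdc09`, while input without a hashtag yields `None`.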

Next Don talked a bit about typing in “M” (22:10). “M” uses structural typing. Think of this as being a bit like duck typing: if two types have members with the same names and the same types, then they are equivalent, whatever the types themselves happen to be called. M has a number of intrinsic types (logical, number, text, etc.) and a number of “data compositors” – ways of combining types together – like collections, sequences and records.
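Here is a small Python sketch of what structural equivalence means. Python's classes are nominally typed, so the `shape` and `structurally_equivalent` helpers are my own hypothetical functions for comparing record shapes; they illustrate the idea and say nothing about how “M” implements it.

```python
from dataclasses import dataclass, fields


@dataclass
class Person:
    name: str
    age: int


@dataclass
class Employee:  # different name, same members
    name: str
    age: int


@dataclass
class Product:  # same first member, different second member
    name: str
    price: float


def shape(record_type):
    """Map each member name of a record type to its declared type."""
    return {f.name: f.type for f in fields(record_type)}


def structurally_equivalent(a, b):
    """True when both record types have the same member names and types."""
    return shape(a) == shape(b)
```

`Person` and `Employee` are distinct classes as far as Python is concerned, but `structurally_equivalent(Person, Employee)` is true because their members match, whereas `Person` and `Product` differ in shape.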

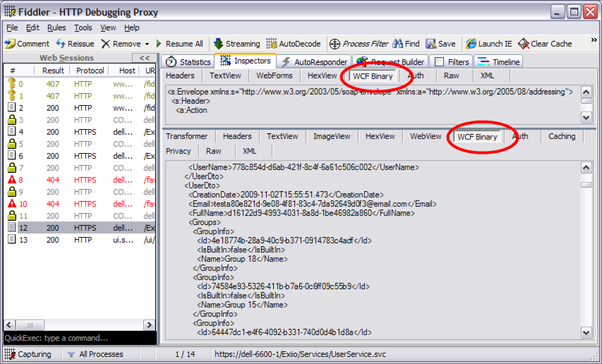

Don followed this up with a demo of “Quadrant” (there go the air-quotes again) (25:36), showing how this can be used to deploy schemas defined in “M” to the database. “M” is tightly integrated into “Quadrant”: you can type in “M” expressions and it will execute them directly against your database, showing the results in pretty tables (34:50).

Don finished off by talking about how M can be used for defining EDM models (34:19): many people prefer describing models in text rather than in a designer, especially since performance in the EDM designer suffers with large models.