Fancy earning a Lotto prize of 1.2 quadrillion dollars? Then you should move to Zimbabwe! Don't be in too much of a hurry, though. By the time you'd brought it back home, your Zimbabwe-dollar haul would have shrunk to less than 4,000 US Dollars.

Fancy earning a Lotto prize of 1.2 quadrillion dollars? Then you should move to Zimbabwe! Don't be in too much of a hurry, though. By the time you'd brought it back home, your Zimbabwe-dollar haul would have shrunk to less than 4,000 US Dollars.

A couple of weeks back the BBC published an article talking about the problem of big numbers in Zimbabwe. Due to daily expenses measured in the trillions of dollars, with hyperinflation of 2,200,000% pushing that figure ever higher, the citizens of Zimbabwe have been googling to find the names for the next big numbers after trillions. Coincidentally, I was doing the same thing the day before I read the article as I went about solving Project Euler Problem 17.

The problem wants to know how many letters are needed to write out the numbers 1 to 1000 in words. Clearly this calls for an algorithm to convert numbers to words; since I always like to give a little extra to my loyal readers, I wanted to create an algorithm to handle numbers bigger than 1000. I ended up with one that can convert anything up to long.MaxValue: in words, nine quintillion, two hundred and twenty-three quadrillion, three hundred and seventy-two trillion, thirty-six billion, eight hundred and fifty-four million, seven hundred and seventy-five thousand, eight hundred and seven! Plenty powerful enough to write out Bill Gate's paychecks, and sufficient to keep the Zimbabweans going for the next few months!

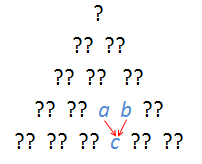

So how does the algorithm work? A basic ingredient is a dictionary mapping the basic numbers to words. This covers the numbers 1 to 20, then every multiple of ten up to 100, then each larger number that has a special name. The key ingredient is a recursive function (functional programming had to come into it somewhere) that starts with the largest part of a number and repeatedly breaks it down into its components.

In Pseudo-code:

- Let n be the number to process

- For numbers smaller than 0, combine "Negative" with the result of calling the algorithm on the absolute value of n

- For n between 0 and 20, look up the result in the word dictionary

- For n between 20 and 100, calculate the number of 10s and number of units; look up the name for the number of tens; if there are any units, look up the appropriate name and combine the two results with a hyphen

- For n between 100 and 1000, calculate the number of 100s and the remainder; look up the name for the number of hundreds; if there is any remainder, recurse to get its wordy representation, then combine it with result for the hundreds using "and" to join the two parts

- For n bigger than 1000: decide which is the biggest named number (million, billion, etc.) that divides into n. Calculate the number of that base unit and the remainder. Recurse to convert the number of base units into words, and recurse to convert the remainder into words. Combine the two parts using ","

In C#:

public static class ConvertToWordsExtension

{

private static Dictionary<long, string> _wordDictionary;

private const int OneThousand = 1000;

private const long OneMillion = 1000000;

private const long OneBillion = 1000000000;

private const long OneTrillion = 1000000000000;

private const long OneQuadrillion = 1000000000000000;

private const long OneQuintillion = 1000000000000000000;

/// <summary>

/// Converts a number to its English representation in words.

/// </summary>

/// <remarks>Uses the Short Scale for large numbers. See http://en.wikipedia.org/wiki/Long_and_short_scales for more details</remarks>

public static string ConvertToWords(this long number)

{

EnsureWordDictionaryInitialised();

if (number == long.MinValue)

{

throw new ArgumentOutOfRangeException();

}

return ConvertToWordsCore(number);

}

/// <summary>

/// Converts a number to its English representation in words

/// </summary>

public static string ConvertToWords(this int number)

{

return ConvertToWords((long)number);

}

private static Dictionary<long, string> CreateWordDictionary()

{

return new Dictionary<long, string>

{

{0, "zero"},

{1, "one"},

{2, "two"},

{3, "three"},

{4, "four"},

{5, "five"},

{6, "six"},

{7, "seven"},

{8, "eight"},

{9, "nine"},

{10, "ten"},

{11, "eleven"},

{12, "twelve"},

{13, "thirteen"},

{14, "fourteen"},

{15, "fifteen"},

{16, "sixteen"},

{17, "seventeen"},

{18, "eighteen"},

{19, "nineteen"},

{20, "twenty"},

{30, "thirty"},

{40, "forty"},

{50, "fifty"},

{60, "sixty"},

{70, "seventy"},

{80, "eighty"},

{90, "ninety"},

{100, "hundred"},

{OneThousand, "thousand"},

{OneMillion, "million"},

{OneBillion, "billion"},

{OneTrillion, "trillion"},

{OneQuadrillion, "quadrillion"},

{OneQuintillion, "quintillion"}

};

}

private static void EnsureWordDictionaryInitialised()

{

// ensure thread-safety when caching our word dictionary

// note: this doesn't prevent two copies of the word dictionary

// being initialised - but that doesn't matter; only one would be

// cached, the other garbage collected.

if (_wordDictionary == null)

{

var dictionary = CreateWordDictionary();

Interlocked.CompareExchange(ref _wordDictionary, dictionary, null);

}

}

private static string ConvertToWordsCore(long number)

{

if (number < 0)

{

return "negative " + ConvertToWordsCore(Math.Abs(number));

}

// if between 1 and 19, convert to word

if (0 <= number && number < 20)

{

return _wordDictionary[number];

}

// if between 20 and 99, convert tens to word then recurse for units

if (20 <= number && number < 100)

{

return ProcessTens(number, _wordDictionary);

}

// if between 100 and 999, convert hundreds to word then recurse for tens

if (100 <= number && number < OneThousand)

{

return ProcessHundreds(number, _wordDictionary);

}

if (OneThousand <= number && number < OneMillion)

{

return ProcessLargeNumber(number, OneThousand, _wordDictionary);

}

if (OneMillion <= number && number < OneBillion)

{

return ProcessLargeNumber(number, OneMillion, _wordDictionary);

}

if (OneBillion <= number && number < OneTrillion)

{

return ProcessLargeNumber(number, OneBillion, _wordDictionary);

}

if (OneTrillion <= number && number < OneQuadrillion)

{

return ProcessLargeNumber(number, OneTrillion, _wordDictionary);

}

if (OneQuadrillion <= number && number < OneQuintillion)

{

return ProcessLargeNumber(number, OneQuadrillion, _wordDictionary);

}

else

{

return ProcessLargeNumber(number, OneQuintillion, _wordDictionary);

}

}

private static string ProcessLargeNumber(long number, long baseUnit, Dictionary<long, string> wordDictionary)

{

// split the number into number of baseUnits (thousands, millions, etc.)

// and the remainder

var numberOfBaseUnits = number / baseUnit;

var remainder = number % baseUnit;

// apply ConvertToWordsCore to represent the number of baseUnits as words

string conversion = ConvertToWordsCore(numberOfBaseUnits) + " " + wordDictionary[baseUnit];

// recurse for any remainder

conversion += remainder <= 0 ? ""

: (remainder < 100 ? " and " : ", ") + ConvertToWordsCore(remainder);

return conversion;

}

private static string ProcessHundreds(long number, Dictionary<long, string> wordDictionary)

{

var hundreds = number / 100;

var remainder = number % 100;

string conversion = wordDictionary[hundreds] + " " + wordDictionary[100];

conversion += remainder > 0 ? " and " + ConvertToWordsCore(remainder) : "";

return conversion;

}

private static string ProcessTens(long number, Dictionary<long, string> wordDictionary)

{

Debug.Assert(0 <= number && number < 100);

// split the number into the number of tens and the number of units,

// so that words for both can be looked up independantly

var tens = (number / 10) * 10;

var units = number % 10;

string conversion = wordDictionary[tens];

conversion += units > 0 ? "-" + wordDictionary[units] : "";

return conversion;

}

}Having coded the algorithm like that, I was casting around to find a way of making the ConvertToWordsCore method more compact. That was when I came across Igor Ostrovsky's post on the ternary conditional operation ("?") and was reminded of a neat trick. I re-wrote the method like this:

private static string ConvertToWordsCore(long number)

{

return

number < 0 ? "negative " + ConvertToWordsCore(Math.Abs(number))

: 0 <= number && number < 20 ? _wordDictionary[number]

: 20 <= number && number < 100 ? ProcessTens(number, _wordDictionary)

: 100 <= number && number < OneThousand ? ProcessHundreds(number, _wordDictionary)

: OneThousand <= number && number < OneMillion ? ProcessLargeNumber(number, OneThousand, _wordDictionary)

: OneMillion <= number && number < OneBillion ? ProcessLargeNumber(number, OneMillion, _wordDictionary)

: OneBillion <= number && number < OneTrillion ? ProcessLargeNumber(number, OneBillion, _wordDictionary)

: OneTrillion <= number && number < OneQuadrillion ? ProcessLargeNumber(number, OneTrillion, _wordDictionary)

: OneQuadrillion <= number && number < OneQuintillion ? ProcessLargeNumber(number, OneQuadrillion, _wordDictionary)

: ProcessLargeNumber(number, OneQuintillion, _wordDictionary); // long.Max value is just over nine quintillion

}That's much more compact, and to my eyes, a lot more elegant. The only downside I can see is that debugging might be more difficult. Which form do you prefer?

With this algorithm in place, solving Project Euler 17 is trivial:

[EulerProblem(17, Title="How many letters would be needed to write all the numbers in words from 1 to 1000?")]

public class Problem17

{

public long Solve()

{

return

1.To(1000)

.Select(

number => number

.ConvertToWords()

.ToCharArray()

.Count(character => char.IsLetter(character))

)

.Sum();

}

}As always, full code (including some unit tests for the ConvertToWords method!) is available on my Project Euler code gallery page. You can also try out the algorithm in my handy online utility to convert numbers to words.

Update 27/5/09:Fixed bug where 'and' was missed out in numbers like 1006