Google Chrome is magic: getting ready for my summer holiday to Brugge, I hit the city’s homepage. A little bar popped up at the top of the page: “This page is in Dutch. Would you like to translate it?”

How does Chrome know that? I suppose the domain name “.be” is one clue, but they speak French as well as Dutch in Belgium, so that by itself is not enough. Chrome does the same trick for pages written in a whole raft of languages, from Afrikaans to Yiddish.

I don’t know what Google’s secret sauce is, but I can show you one simple and surprisingly effective technique with an elegant implementation using LINQ in C#.

Studies in Linguistic Geometry

It is built around the observation that in each sufficiently large sample of text in a given language, letters of the alphabet will all occur with roughly the same frequencies. In English for example, the letter ‘e’ tops the popularity charts, making up about 13% of the average body of text. ‘t’ and ‘a’ are next, accounting for 9% and 8%. By contrast, in Portuguese, ‘a’ is the most frequent, at 15%, with ‘e’ and ‘o’ close behind.

So letter frequencies can be used as a kind of signature, or DNA profile, for a language. But given a sample of text, how do we decide which language signature it matches most closely? This is where we switch from linguistics to geometry.

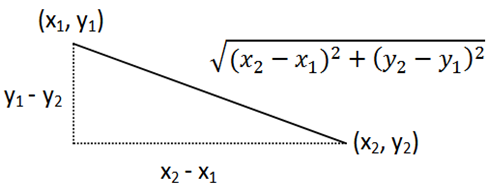

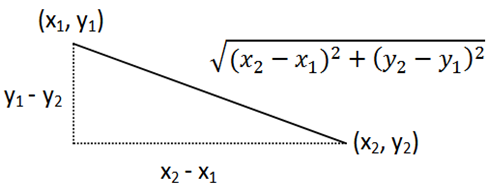

In 2-dimensional space, we calculate the distance between two points using Pythagoras’ theorem.  The same thing works for two points in the real, 3-d, world. The distance from (x1, y1, z1) to (x2, y2, z2) is

The same thing works for two points in the real, 3-d, world. The distance from (x1, y1, z1) to (x2, y2, z2) is

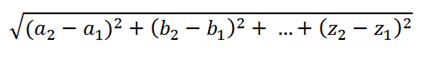

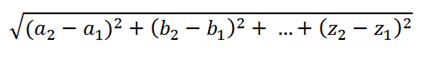

But why stop there? What about the distance between two points in 26-dimensional space? You guessed it!

26-d space? Why bring that into the equation? Well think about those language signatures, giving the frequency of occurrence of each letter. You can reinterpret those signatures as points in 26-d space: the point representing the signature for English would be at 0.13 along the ‘e’ axis, 0.09 along the ‘t’ axis, 0.08 along the ‘a’ axis, and so on.

This then gives us our straightforward method of determining which language our sample text belongs to. We do a frequency analysis on its letters, then imagine the result of that analysis as a point in 26-d space, and work out which language signature it lies closest to using Pythagoras. Easy!

So how does it look in LINQ?

Linq to Linguistics

We start off with the frequency analysis (taking care to normalise the text to lower-case):

IEnumerable<CharacterFrequency> CalculateCharacterFrequencies(string sample)

{

var characterFrequencies = sample

.Select(char.ToLower)

.GroupBy(c => c)

.Select(group => new CharacterFrequency

{

Character = group.Key,

Frequency = (double)group.Count() / sample.Length

});

return characterFrequencies;

}

Next we introduce a LanguageSignature class to hold the signature of each language:

[DebuggerDisplay("Language = { Language }")]

public class LanguageSignature

{

private IDictionary<char, double> _frequencies;

public LanguageSignature(string language, IDictionary<char, double> characterFrequencies)

{

Language = language;

_frequencies = characterFrequencies;

}

public string Language { get; protected set; }

public double GetFrequency(char character)

{

double frequency;

return _frequencies.TryGetValue(character, out frequency) ? frequency : 0;

}

}

Here are some snippets of sample signatures, data courtesy of Wikipedia

public static class LanguageSignatures

{

public static LanguageSignature English =

new LanguageSignature(

"English",

new Dictionary<char, double>

{

{'a', 0.08167},

{'b', 0.01492},

{'c', 0.02782},

/// etc.

});

public static LanguageSignature French =

new LanguageSignature(

"French",

new Dictionary<char, double>

{

{'a', 0.07636},

{'b', 0.00901},

// etc.

});

}

Now we do our sums in 26-d space, ignoring any letters that we don’t have frequency data for (note that Sqrt is a little extension method I created on the Double class that simply calls Math.Sqrt):

double CalculateDistanceFromSignature(LanguageSignature signature, IEnumerable<CharacterFrequency> characterFrequencies)

{

var distance = characterFrequencies

.Where(characterFrequency => signature.GetFrequency(characterFrequency.Character) > 0)

.Select(characterFrequency

=> Math.Pow(characterFrequency.Frequency - signature.GetFrequency(characterFrequency.Character), 2))

.Sum()

.Sqrt();

return distance;

}

Now we’re ready to determine which language our sample is closest to:

public LanguageSignature DetermineMostLikelyLanguage(string sample, IEnumerable<LanguageSignature> signatures)

{

var characterFrequencies = CalculateCharacterFrequencies(sample);

var closestLanguage = signatures.Select(signature => new

{

Language = signature,

Distance = CalculateDistanceFromSignature(signature, characterFrequencies)

})

.MinItem(languageDistance => languageDistance.Distance);

return closestLanguage.Language;

}

MinItem is an extension method I knocked up that returns the item from a sequence that yields the smallest value from the expression you supply (in this case, the smallest distance):

public static T MinItem<T, TCompare>(this IEnumerable<T> sequence, Func<T, TCompare> comparatorSelector) where TCompare : IComparable<TCompare>

{

var minItem = sequence.Aggregate(

sequence.First(),

(current, min) => comparatorSelector(current).CompareTo(comparatorSelector(min)) < 0 ? current : min);

return minItem;

}

And we’re done.

Testing, Testing, Un, deux, trois

To prove it, I created a little test that analysed samples of texts in several languages taken from Project Gutenberg (the test uses MbUnit’s very handy XmlData feature to read the different test cases from my xml file).

public class LanguageAnalyserTests

{

[Test]

[XmlData("//Sample", FilePath = "ProblemData\\LanguageSamples.xml")]

public void TestAnalysis([Bind("Text")]string text, [Bind("@Language")]string expectedLanguage)

{

var analyser = new LanguageAnalyser();

var determinedLanguage = analyser.DetermineMostLikelyLanguage(

text

new[] { LanguageSignatures.English, LanguageSignatures.French, LanguageSignatures.German, LanguageSignatures.Dutch, LanguageSignatures.Spanish, LanguageSignatures.Italian });

Assert.AreEqual(expectedLanguage, determinedLanguage.Language);

}

}

In my test I’ve got 9 samples in German, English, French, Dutch, Spanish and Italian, extracted at random from the Gutenberg archives and each sample is correctly identified by my analyser. I also did a little experiment to see how much text it needed to correctly distinguish the samples. Limiting the samples to 600 characters caused some confusion between Spanish and English, but 700 characters was sufficient in my tests to enable the analyser to pick the right language for all samples.

A Challenge

I actually developed this LanguageAnalyser to help me solve a problem on Project Euler. Which one was it? Answers in the comments please, or by email, and bonus marks to anybody who can guess what my solution looks like. Check back Monday for the answer, and for full source code to today’s post.

Update: The answer's here, and the source code is now on MSDN Code Gallery